Like printing, just better.

Save hundreds of dollars per user every year and ditch print servers and printer drivers, all while minimizing risk to networks and printers. For all devices, apps and desktops.

Unlock printing for the hybrid workplace now.

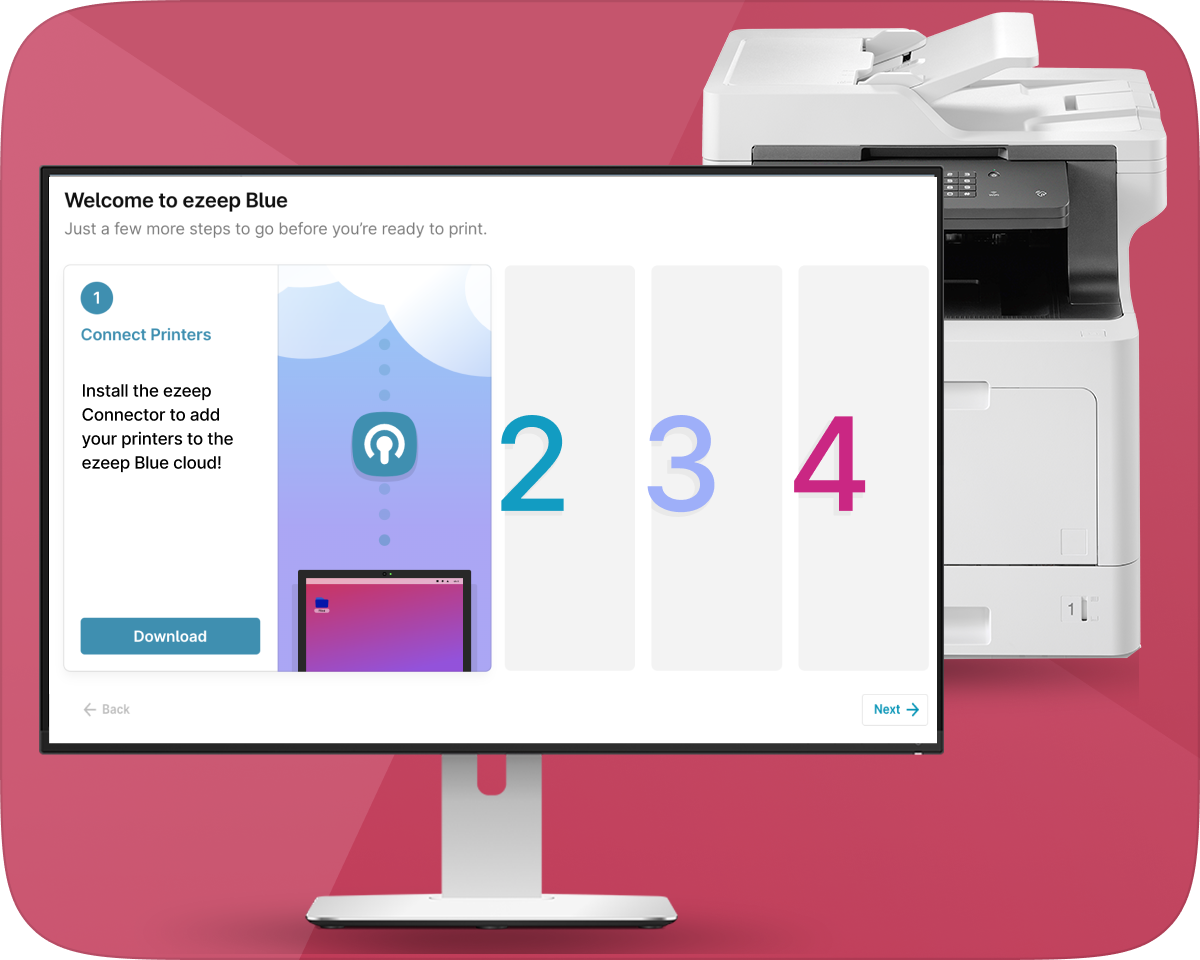

ezeep Blue delivers reliable printing to any printer without installing printer drivers. Sign up and connect your printers in just a few clicks.

✓ Network printers

✓ Mobile printers

✓ Home office printers

✓ Public shared printers

✓ Label printers

✓ Point-of-sale printers

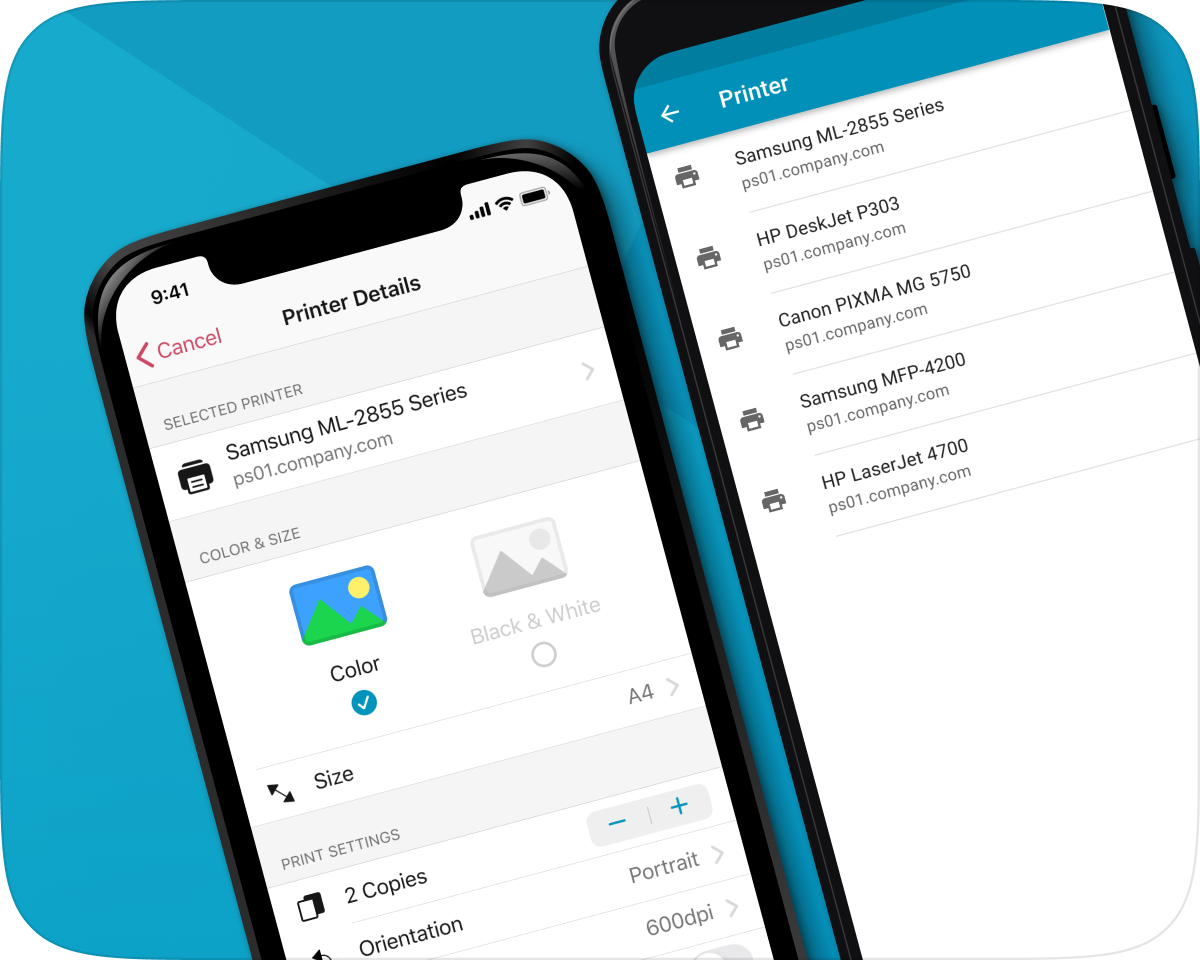

Our ezeep apps deliver a beautiful mobile printing experience for iOS and Android. Mac, Windows and Chrome users print right from their desktop applications without installing any printer drivers.

The complicated management of print servers, printer drivers and devices is over. Set up ezeep Blue in a few clicks to simplify and automate print management with our intuitive web app.

Industry-standard security measures ensure our infrastructure and software are secure and compliant with the EU GDPR, while pull printing provides document confidentiality for admins and users.

We offset the CO2 produced when printing via our platform. Learn how we do this with justdiggit.org.

ezeep Blue helps put an end to uncollected paper documents. Our pull printing feature makes sure that each printout is released only once the user is at the printer.